IT Pro Verdict

The FX2 takes the concept of modular servers to the next level and is ideal for data centres that want a rack dense solution that can be easily customised to suit their workloads. It’s much more flexible than a blade server, supports a good range of server nodes and has excellent remote management features.

Pros

Big choice of server blocks; Good rack density; Highly scalable design; Total remote management

Cons

No CMC redundancy

Dell's PowerEdge FX2 claims to have re-invented the rack server. This modular server offers enterprises a flexible means of matching infrastructure with workload and allows them to scale up easily with demand. It fills the gap between Dell's VRTX and M1000e by delivering a more flexible, rack-dense server solution.

The modular server concept is far from new as both HP and Supermicro have been at it for years. Supermicro came first with its low-cost Twin, Twin² and FatTwin products while HP has its Scalable Server portfolio comprising the SL2500 and venerable SL6500.

Dell may have taken longer to jump on the bandwagon but a major difference is it's done it better. The FX2 offers a bigger choice of compute nodes and I/O options than the competition while chassis and node management are also far superior.

This 2U rack chassis supports a wide selection of server, storage and I/O modules, or blocks, as Dell calls them. Similar to the VRTX, the FX2 has a passive mid-plane which links the server blocks to networking, power and centralised management services.

The FC630 server block packs a lot of hardware including dual E5-2600 v3 Xeons

What's in the chassis?

Dell offers two chassis versions. Both support a range of half-width, quarter-width and full-width server blocks, and both have dual hot-plug PSUs, two I/O block bays and a CMC (chassis management controller).

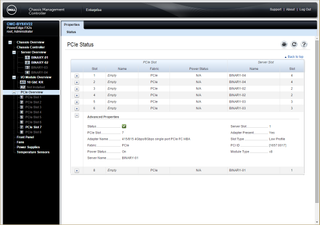

On review is the FX2s (switched) model which has eight PCI-e 3.0 slots linked to a PCI-e switch on the mid-plane and pre-configured to map two slots to each server block. Note the slots are only 'warm-swappable' so the associated server block must be powered down prior to adding or removing a card.
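The default slot mapping can be pictured with a short Python sketch. It only illustrates the two-slots-per-block arrangement described above: the function names and the contiguous slot numbering are assumptions made for this example, not Dell's actual slot order or any CMC interface.

```python
def default_slot_map(num_slots: int = 8, num_blocks: int = 4) -> dict[int, list[int]]:
    """Split the chassis PCI-e slots evenly across the server blocks.

    Assumption: slots are numbered 1..num_slots and handed out contiguously.
    The real FX2s slot order is not stated in the review.
    """
    if num_blocks <= 0 or num_slots % num_blocks != 0:
        raise ValueError("slots must divide evenly across server blocks")
    per_block = num_slots // num_blocks
    return {
        block: list(range((block - 1) * per_block + 1, block * per_block + 1))
        for block in range(1, num_blocks + 1)
    }


def can_change_card(block_powered_on: bool) -> bool:
    """Warm-swap rule: a card may only be added or removed while its block is off."""
    return not block_powered_on


if __name__ == "__main__":
    for block, slots in default_slot_map().items():
        print(f"server block {block}: PCI-e slots {slots}")
```

With four half-width blocks fitted, each block ends up with two slots, which matches the pre-configured mapping on the review system.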

The FX2 version doesn't have any PCI-e slots and is designed specifically for Dell's PowerEdge FM120x4 half-width server blocks. These Atom C2000 SoC based blocks don't have PCI-e connectivity but each contains four separate servers and is designed to provide a low-cost, high density solution that's easy on the power.
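To put the density claim into numbers, here is a rough Python calculation. It assumes a chassis fully populated with four half-width blocks (the figure given in the specification list) and uses made-up constant and function names purely for illustration.

```python
CHASSIS_HEIGHT_U = 2   # the FX2 is a 2U rack chassis
HALF_WIDTH_BAYS = 4    # half-width node bays per chassis, per the spec list


def servers_per_chassis(servers_per_block: int, bays: int = HALF_WIDTH_BAYS) -> int:
    """Total servers when every half-width bay holds the same block type."""
    return servers_per_block * bays


fm120x4_servers = servers_per_chassis(4)   # four Atom servers per FM120x4 block
fc630_servers = servers_per_chassis(1)     # one dual-socket server per FC630 block

if __name__ == "__main__":
    print(f"FM120x4: {fm120x4_servers} servers in {CHASSIS_HEIGHT_U}U")
    print(f"FC630:   {fc630_servers} servers ({fc630_servers * 2} Xeon sockets) in {CHASSIS_HEIGHT_U}U")
```

On these assumptions a chassis full of FM120x4 blocks holds 16 separate servers in 2U, against four dual-socket servers when fitted with FC630 blocks.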

The chassis has room for two I/O connector blocks and Dell currently has 8-port Gigabit and 10GbE pass-through modules allowing both NICs on each server to be connected directly to external switches. Three I/O aggregator blocks are on the cards with the FN410S and FN410T offering four external 10GbE SFP+ or copper ports while the FN2210S has four combo ports providing converged FCoE capabilities.

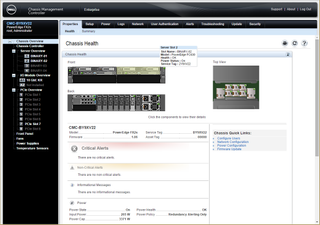

Dell's CMC provides a well-designed management console that's consistent with its VRTX and M1000e servers

Blocking manoeuvres

Dell is releasing five server blocks and a dedicated storage block for the FX2 but you'll have to be patient as most won't be available until early 2015. For now, you have the FM120x4 Atom block and the FC630 which supports the latest E5-2600 v3 Xeons.

Our review system was supplied with a pair of half-width FC630 blocks and Dell has done a fine job packing the hardware in. These dual socket servers came equipped with E5-2690 v3 Xeons mounted with chunky passive heatsinks.

The CPU sockets are positioned down the centre of the motherboard and flanked by a total of 24 DIMM slots supporting up to 768GB of DDR4 memory. The board has a mezzanine card slot at the back which links up with the chassis' PCI-e mid-plane switch and whichever cards are fitted in its mapped slots.
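The memory figures imply a DIMM size that is easy to work out. The Python sketch below does the arithmetic, assuming all 24 slots take identical modules and that four FC630 blocks are fitted; the names are illustrative only.

```python
DIMM_SLOTS = 24        # DIMM slots per FC630 block
MAX_MEMORY_GB = 768    # maximum DDR4 per FC630 block
FC630_PER_CHASSIS = 4  # half-width bays in one chassis


def dimm_size_gb(max_memory_gb: int, slots: int) -> int:
    """Module size needed to reach the maximum, assuming identical DIMMs."""
    if slots <= 0 or max_memory_gb % slots != 0:
        raise ValueError("maximum memory must divide evenly across the slots")
    return max_memory_gb // slots


per_dimm_gb = dimm_size_gb(MAX_MEMORY_GB, DIMM_SLOTS)
chassis_max_gb = MAX_MEMORY_GB * FC630_PER_CHASSIS

if __name__ == "__main__":
    print(f"{per_dimm_gb}GB per DIMM, {chassis_max_gb}GB per fully loaded chassis")
```

That works out at 32GB modules in every slot and 3,072GB across a chassis with four FC630 blocks.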

Standard storage is limited to two hot-swap SFF hard disks but there is a version with room for eight 1.8in. SSDs. These are managed by an embedded PERC H730 Mini RAID controller. Along with dual 10GbE NICs, the board has two SD memory card slots which support a mirrored mode for hypervisor redundancy.

The chassis' eight PCI-e slots are mapped to each server block and accepted our industry standard cards

Centralised management

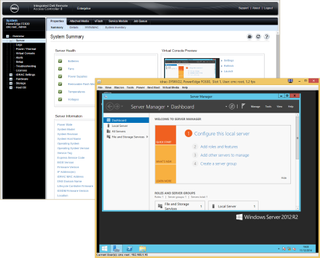

Dell has maintained consistency for remote management as the CMC is basically the same as that on the VRTX and M1000e. Its first network port is for management access while the second port is used to daisy-chain up to 20 FX2 systems allowing them to be managed as a single entity.

Our only issue is the lack of redundancy as the CMC is responsible for monitoring the entire system, managing cooling and controlling the fans. The system won't go belly-up if the CMC fails but when we removed it, the eight chassis fans went into turbo mode until it was replaced.

The CMC web interface is, indeed, very similar to that of the VRTX. It opens with graphical views of all components, installed server and I/O blocks plus any PCI-e cards along with their slot mappings.

We could monitor and control chassis power, disable the chassis power button and apply power capping values to the entire system. Individual server blocks can be monitored and controlled from the CMC interface which also provides a direct link to their own onboard iDRAC8 management controllers.

Each server iDRAC8 can be accessed directly from the CMC for monitoring and remote control

Conclusion

It may have taken Dell a while to latch on to the appeal of modular servers but at least it's done it in style. Having reviewed many competing products from HP and Supermicro we can safely say the FX2 is the best example of this technology we've seen so far.

Chassis: 2U rack

Power: 2 x 1600W hot-plug PSUs (1100W option)

Cooling: 8 x hot-plug fan modules

Node bays: 4 x 1U half-width (max. 8 x quarter-width)

Management: Dell CMC

Network: 2 x I/O module bays

Expansion: 8 x PCI-Express 3.0 slots

FC630 server node (max 4)

CPU: 2 x 2.6GHz Xeon E5-2690 v3

Memory: 64GB DDR4 (max 768GB)

Storage: 2 x 300GB SAS 10K SFF hard disks

RAID: Dell PERC H730 Mini/2GB cache

Array support: RAID0, 1, 10, 5, 6, 50, 60

Network: 2 x Broadcom 10GbE NICs

Expansion: FC PCI-e Mezzanine Adapter

Management: Dell iDRAC8 Enterprise

Warranty: 3yrs on-site NBD

Dave is an IT consultant and freelance journalist specialising in hands-on reviews of computer networking products covering all market sectors from small businesses to enterprises. Founder of Binary Testing Ltd, the UK’s premier independent network testing laboratory, Dave has over 45 years of experience in the IT industry.

Dave has produced many thousands of in-depth business networking product reviews from his lab which have been reproduced globally. Writing for ITPro and its sister title, PC Pro, he covers all areas of business IT infrastructure, including servers, storage, network security, data protection, cloud, infrastructure and services.