The D2D4000 performs deduplication on the appliance itself, which allows it to work with any backup software and data format. Like many deduplication solutions, it breaks the incoming data stream into 4KB blocks, or chunks, computes a hash for each one and stores those hashes in an index on the appliance.
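To make the chunk-and-hash idea concrete, here is a minimal sketch of hash-indexed deduplication. It assumes simple fixed-size 4KB chunks and SHA-256 hashes; the review does not say which hash algorithm the appliance uses or whether its chunk boundaries are fixed or variable, so `deduplicate`, `restore` and the in-memory `index` are illustrative stand-ins, not HP's implementation.

```python
import hashlib

CHUNK_SIZE = 4 * 1024  # 4KB chunks; the fixed size here is an assumption for illustration


def deduplicate(stream: bytes, index: dict[str, bytes]) -> list[str]:
    """Split `stream` into 4KB chunks and store only chunks not already in `index`.

    Returns the ordered list of chunk hashes (a "recipe") needed to rebuild the stream.
    """
    recipe = []
    for offset in range(0, len(stream), CHUNK_SIZE):
        chunk = stream[offset:offset + CHUNK_SIZE]
        digest = hashlib.sha256(chunk).hexdigest()  # hash choice is an assumption
        if digest not in index:
            index[digest] = chunk  # first time this chunk is seen: store it once
        recipe.append(digest)  # duplicates only add a reference, not more data
    return recipe


def restore(recipe: list[str], index: dict[str, bytes]) -> bytes:
    """Rebuild the original stream by looking up each hash in the index."""
    return b"".join(index[digest] for digest in recipe)


if __name__ == "__main__":
    index: dict[str, bytes] = {}
    data = b"A" * CHUNK_SIZE * 3 + b"B" * CHUNK_SIZE
    recipe = deduplicate(data, index)
    print(len(recipe), len(index))  # prints "4 2": four chunks referenced, two stored
    assert restore(recipe, index) == data
```

Running a second backup of unchanged data through the same index adds references but no new stored chunks, which is where the space savings come from.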

To test deduplication and low-bandwidth replication (LBR), we used a pair of D2D4000 appliances, with one acting as the source and the other as a remote target. Between them we placed a Network Nightmare WAN simulator configured for a 2Mbps link.
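A quick calculation shows why deduplication matters on a link this slow. The sketch below estimates replication time over the simulated 2Mbps link; `transfer_hours` is a hypothetical helper, it ignores protocol overhead, and the 20:1 reduction ratio in the example is an assumed figure, not a measured result.

```python
def transfer_hours(gigabytes: float, link_mbps: float = 2.0, dedupe_ratio: float = 1.0) -> float:
    """Estimate hours to replicate `gigabytes` (decimal GB) over a `link_mbps` link.

    `dedupe_ratio` is a hypothetical reduction factor: 20.0 means only 1/20th
    of the data actually crosses the WAN. Protocol overhead is ignored.
    """
    megabits = gigabytes * 8 * 1000 / dedupe_ratio  # decimal GB -> megabits actually sent
    return megabits / link_mbps / 3600  # seconds on the link -> hours


if __name__ == "__main__":
    # 50GB matches the per-application data set size used in these tests
    print(f"{transfer_hours(50):.1f} h without deduplication")
    print(f"{transfer_hours(50, dedupe_ratio=20):.1f} h at an assumed 20:1 ratio")
```

At 2Mbps, a full 50GB copy would occupy the link for well over two days, so only replicating unique chunks is what makes WAN replication practical.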

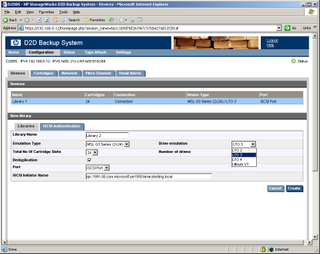

When creating VTLs, you can choose from a range of LTO tape devices and library emulations.

At our local site we used a Dell PowerEdge 2900 quad-core Xeon system as a domain controller running Windows Server 2003 and Exchange Server 2003. Symantec Backup Exec 12.5 managed local backups to the source appliance; we installed it on a Dell PowerEdge 1950 quad-core Xeon system running Windows Server 2003 file services and SQL Server 2005.

Installation is swift: you run a wizard on each host system that installs the LTO tape drivers, configures the iSCSI initiator and discovers the D2D4000 appliance. You don't even need to create a VTL manually, as the appliance can create one automatically when it detects an initiator logging on to it.
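The automatic VTL creation behaves like a "create on first login" rule. The toy model below sketches that behaviour under stated assumptions: one VTL per initiator, keyed by the initiator's iSCSI qualified name (IQN), with a naming scheme invented for illustration. `Appliance`, `VirtualTapeLibrary` and `on_initiator_login` are hypothetical names, not the D2D4000's actual interface.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualTapeLibrary:
    name: str  # hypothetical naming scheme derived from the initiator's host name


@dataclass
class Appliance:
    """Toy model: auto-create one VTL the first time each iSCSI initiator logs on."""

    vtls: dict[str, VirtualTapeLibrary] = field(default_factory=dict)

    def on_initiator_login(self, initiator_iqn: str) -> VirtualTapeLibrary:
        # Returning initiators get their existing VTL; new ones get a fresh VTL.
        if initiator_iqn not in self.vtls:
            host = initiator_iqn.rsplit(":", 1)[-1]  # text after the last colon in the IQN
            self.vtls[initiator_iqn] = VirtualTapeLibrary(name=f"VTL-{host}")
        return self.vtls[initiator_iqn]


if __name__ == "__main__":
    appliance = Appliance()
    # Hypothetical IQN in the Microsoft initiator's "iqn.1991-05.com.microsoft:<host>" form
    first = appliance.on_initiator_login("iqn.1991-05.com.microsoft:pe1950")
    again = appliance.on_initiator_login("iqn.1991-05.com.microsoft:pe1950")
    print(first.name, first is again, len(appliance.vtls))
```

Keying on the IQN means a host that reconnects is handed the same library rather than a duplicate.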

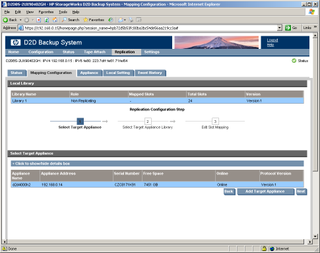

Replication setup only needs to be carried out from the source appliance and can be completed in as few as four steps.

Replication configuration is a four-step process carried out only at the source appliance, where you choose a source VTL, enter the address of the remote appliance and select a target VTL for replication. Blackout windows control when replication is allowed to run, and you decide how much of the WAN link's bandwidth it can use.
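The interaction between blackout windows and the bandwidth cap can be sketched as a small policy object. This is a hypothetical model of the settings described above, not the appliance's configuration format: `ReplicationPolicy`, its field names, the VTL names and the 192.0.2.10 address are all illustrative, and it assumes the cap is expressed as a fraction of the link.

```python
from dataclasses import dataclass, field
from datetime import datetime, time


@dataclass
class ReplicationPolicy:
    source_vtl: str
    target_address: str
    target_vtl: str
    # Each window is (start, end); a window whose end is earlier than its start wraps past midnight.
    blackout_windows: list[tuple[time, time]] = field(default_factory=list)
    max_link_share: float = 1.0  # assumed: fraction of the WAN link replication may use

    def in_blackout(self, now: datetime) -> bool:
        t = now.time()
        for start, end in self.blackout_windows:
            if start <= end:
                if start <= t < end:
                    return True
            elif t >= start or t < end:  # overnight window, e.g. 22:00 to 02:00
                return True
        return False

    def allowed_rate_mbps(self, now: datetime, link_mbps: float) -> float:
        """Bandwidth replication may use right now: zero during a blackout, else the capped share."""
        if self.in_blackout(now):
            return 0.0
        return link_mbps * self.max_link_share


if __name__ == "__main__":
    policy = ReplicationPolicy(
        "VTL-src", "192.0.2.10", "VTL-dst",
        blackout_windows=[(time(8, 0), time(18, 0))],  # keep the WAN free in office hours
        max_link_share=0.5,
    )
    print(policy.allowed_rate_mbps(datetime(2024, 1, 1, 10, 0), 2.0))  # blocked during the day
    print(policy.allowed_rate_mbps(datetime(2024, 1, 1, 20, 0), 2.0))  # half the 2Mbps link at night
```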

We used our standard test suite, which measures deduplication performance for file server, SQL Server and Exchange Server workloads, with a 50GB data set for each application. Our simulated one-month backup period used a standard cycle of weekly full backups plus daily incremental or differential backups, as dictated by best practices for each application.
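The backup cycle above can be sketched as a generated schedule plus a rough estimate of how much data the backup software sends to the appliance. The assumptions are explicit: a four-week month, fulls on Fridays, and a fixed 5% daily change rate with no overlap between days. None of these figures come from the test itself, and `backup_schedule` and `logical_gb` are hypothetical helpers.

```python
from datetime import date, timedelta


def backup_schedule(start: date, days: int = 28, full_weekday: int = 4,
                    partial: str = "incremental") -> list[tuple[date, str]]:
    """Weekly full on `full_weekday` (Mon=0 ... Fri=4); `partial` ("incremental" or "differential") otherwise."""
    schedule = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        kind = "full" if day.weekday() == full_weekday else partial
        schedule.append((day, kind))
    return schedule


def logical_gb(schedule: list[tuple[date, str]], dataset_gb: float = 50.0,
               daily_change: float = 0.05) -> float:
    """Estimate GB written before deduplication, assuming a fixed, non-overlapping daily change rate."""
    total = 0.0
    days_since_full = 0  # days before the first full are treated as following an earlier full
    for _, kind in schedule:
        if kind == "full":
            total += dataset_gb
            days_since_full = 0
            continue
        days_since_full += 1
        if kind == "differential":
            # A differential re-copies everything changed since the last full backup
            total += dataset_gb * min(1.0, daily_change * days_since_full)
        else:
            # An incremental copies only what changed since the previous backup
            total += dataset_gb * daily_change
    return total


if __name__ == "__main__":
    start = date(2024, 1, 1)  # a Monday, chosen arbitrarily
    for mode in ("incremental", "differential"):
        print(mode, logical_gb(backup_schedule(start, partial=mode)))
```

Comparing this logical total with the physical space actually consumed on the appliance is one way to express a deduplication ratio for each application.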

Dave is an IT consultant and freelance journalist specialising in hands-on reviews of computer networking products, covering all market sectors from small businesses to enterprises. The founder of Binary Testing Ltd, the UK’s premier independent network testing laboratory, Dave has over 45 years of experience in the IT industry.

Dave has produced many thousands of in-depth business networking product reviews from his lab, and these have been reproduced globally. Writing for ITPro and its sister title, PC Pro, he covers all areas of business IT infrastructure, including servers, storage, network security, data protection, cloud, infrastructure and services.