You'd be hard pushed to have a conversation about technology today that doesn't mention artificial intelligence (AI) at some point. The technology has massive potential to shape and enhance our working and personal lives, but for all the pros, some remain worried about the downsides.

With talk of job losses as a result of the increased use of AI, should we be that concerned?

Perhaps not, if analyst firm Gartner's predictions are to be believed. It reckons that AI will eliminate 1.8 million jobs. That's the bad news. The good news is that AI will also be responsible for creating 2.3 million jobs by 2020, a net gain of 500,000. Indeed, Gartner believes "2020 will be a pivotal year in AI-related employment dynamics."

"Many significant innovations in the past have been associated with a transition period of temporary job loss, followed by recovery, then business transformation and AI will likely follow this route," said Svetlana Sicular, research vice president at Gartner.

"Unfortunately, most calamitous warnings of job losses confuse AI with automation; that overshadows the greatest AI benefit: AI augmentation, a combination of human and artificial intelligence, where both complement each other."

Keep up with all the latest AI news with our hub below.

Latest AI news

09/06/2020: Microsoft's AI editor shows racial bias on debut

Microsoft's decision to replace journalists with AI on the MSN home page appears to have backfired within just a week.

As reported by The Guardian, the AI software the company is using to curate stories illustrated an article about racism with an image of the wrong band member of Little Mix.

A story about Jade Thirlwall's personal reflections on racism was illustrated with a picture of her fellow band member, Leigh-Anne Pinnock. What's more, despite its human editors correcting the image, the software is also said to be surfacing reports of the mistake on the home page.

The error, which was first spotted on Friday, couldn't have come at a worse time as people across the UK and US are taking to the streets to protest racial injustice. It also follows Microsoft's work to restructure its AI policies, aimed at preventing mishaps of this kind.

Thirlwall, who has been attending recent Black Lives Matter protests in London, criticised MSN for the post, calling it ignorant, unaware that the error was made by artificial intelligence.

"@MSN If you're going to copy and paste articles from other accurate media outlets, you might want to make sure you're using an image of the correct mixed-race member of the group," she said.

At the start of June, contracted journalists were told they would no longer be employed by Microsoft to curate news on the MSN home page. Microsoft doesn't carry out any original reporting or content creation, but it employs human editors to select, edit and repurpose articles from certain publications which it shares advertising revenue with.

With the coronavirus pandemic hitting media jobs hard, the company began terminating the contracts for dozens of its temporary journalists, according to The Guardian, with the aim of replacing them with AI. A number of those whose contracts were terminated suggested the tech giant had already begun using software to curate stories on the website.

According to The Guardian, the human editors are thought to be unable to stop the robot editor from selecting stories from other sites, and as such have been told to stay alert and delete any version of the Little Mix article that the software selects.

IT Pro has approached Microsoft for comment, but a staff member told The Guardian that the company was deeply concerned about reputational damage to its AI product.

"With all the anti-racism protests at the moment, now is not the time to be making mistakes," they said.

09/09/2019: UK has the second-highest number of AI companies in the world

Investment in artificial intelligence has reached a record high in the UK, as Britain's 'scaleups' look to use smart technology to tackle issues such as climate change and fake news.

According to the latest Tech Nation report into such investment, in the first six months of the year, funding for the growth of AI-based companies in the UK hit an all-time high.

Already, investment in AI scaleups (companies that have grown from startups and are now progressing toward becoming larger companies) has hit $1.6 billion in 2019, compared with $1.02 billion for the whole of 2018. In other words, the first half of this year has already beaten last year's total by more than 50%.

The UK is second in the world when it comes to the number of AI companies in the nation, with only the US ahead of it. It's also third in the world when it comes to raising money for AI, sitting behind the US and China. Britain's investment into AI companies is double that of France, Germany and the rest of Europe, according to Tech Nation.

These figures come at a time when Tech Nation has revealed a new cohort of 29 companies that have made it onto the UK's Applied AI growth programme, which aims to propel the growth of startups into scaled-up companies.

With these AI companies located across the UK in cities such as Bournemouth, Bristol, Cambridge, Cardiff, Exeter, Gateshead, Glasgow, Manchester and Oxford, it shows that AI investment is not just centred around startups in London.

"For the UK to maintain its authority in AI, we need to nurture scalable, globally-competitive, homegrown AI companies that solve real problems," said Harry Davies, Tech Nation's Applied AI lead.

"Yet, the pool of AI-focused companies that achieve this beyond Series A remains slim, despite the hype, and the path to scale is uniquely challenging. That is why it is so important that we champion our most promising UK AI companies with the greatest potential for growth as they look to scale and that's what this group represents: 29 of the UK's most exciting AI-focused startups on the path to building impactful and scalable companies, all chosen by a panel of expert judges."

13/05/2019: Google fuels socially-conscious AI technology with $25 million fund

Google has provided $25 million worth of grants to a suite of organisations that are using machine learning and artificial intelligence technology to tackle challenges in society.

The search giant has given portions of this sum to the likes of the Rainforest Connection in the US, which will use machine learning to analyse sensor and audio data to detect sounds associated with illegal logging, and the Crisis Text Line, which uses natural language processing to predict when a person is depressed so that it can act to help them more quickly.

The funding stems from Google's AI for Social Good programme, which was announced last year and, according to VentureBeat, attracted some 2,600 applicants from 119 countries.

Those organisations that were deemed worthy of a portion of the grant will receive funding ranging from $500,000 to $2 million.

Other recipients of a slice of Google funding include the New York City fire department, which, through working with New York University, aims to use AI technology to reduce the time it takes to respond to some 1.7 million emergency calls.

Meanwhile, Colombia's Colegio Mayor de Nuestra Señora del Rosario will use its share of the funding to harness computer vision and satellite imagery to detect illegal mining operations that contaminate local drinking water.

While Google has a reputation for using AI technology to enhance its own services and business operations, this funding shows that the search giant also wants to see cutting-edge technology used to benefit society, not just itself and its customers.

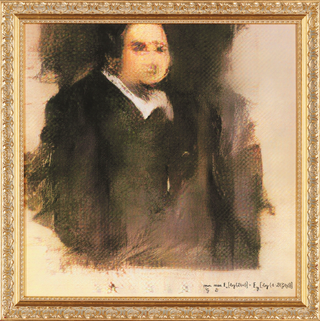

26/10/2018: Christie's sells portrait that puts the 'art' into artificial intelligence

A beautiful but mysterious painting has sold at Christie's for $432,000. The subject's face is somewhat blurred, and in place of the artist's signature there is only a mathematical formula.

This is because the artist is an algorithm. The Portrait of Edmond Belamy is the first work of art created by artificial intelligence to be sold at auction.

The painting is part of a collection of portraits depicting the fictional Belamy family, created by artists and machine learning researchers from French AI startup Obvious.

The Portrait of Edmond Belamy - courtesy of Obvious

Childhood friends Gauthier Vernier, Pierre Fautrel and Hugo Caselles-Dupré created the artworks using generative adversarial networks (GANs). This machine learning technique, devised by Ian Goodfellow in 2014, pits two algorithms against each other during training.

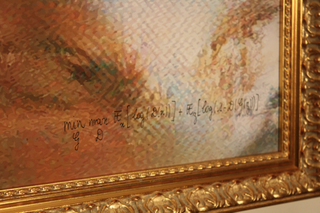

The team has created a series of 11 realistic portraits using GANs, all set in 70 x 70 cm gold frames. The family name 'Belamy' is a reference to Goodfellow, who created the GAN model: 'Goodfellow' roughly translates to 'bel ami' in French. The signature, meanwhile, is the formula for the loss function of the original GAN model.

Signature on 'Le Comte de Belamy' - courtesy of Obvious
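The signature refers to the minimax objective from Goodfellow's original 2014 GAN paper, in which the discriminator D tries to maximise the value and the generator G tries to minimise it. In the standard notation, with x drawn from the real data and z from the random noise fed to the generator, it reads:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

Here D(x) is the discriminator's estimate of the probability that x is a real painting rather than a generated one.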

Working from a dataset of images, a generator created new images by mimicking their style, in this case that of paintings from the 14th to the 18th century. The process continued until a second algorithm, called a 'discriminator', could no longer tell the difference between the generated artwork and the works it mimicked.
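The stopping point described above, where the discriminator can no longer tell real work from generated work, has a precise meaning in the original GAN formulation. The sketch below is a minimal illustration, not Obvious's code. It estimates the GAN objective from discriminator outputs and computes the theoretically optimal discriminator for two one-dimensional Gaussian densities. Those Gaussians are an assumption standing in for the real and generated image distributions. When the two distributions match, the best possible discriminator outputs 0.5 everywhere and the objective equals -log 4.

```python
import math


def gan_value(d_real, d_fake):
    """Estimate the original GAN objective V(D, G) from discriminator outputs.

    d_real: probabilities D assigns to real samples being real.
    d_fake: probabilities D assigns to generated samples being real.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def optimal_discriminator(p_data, p_gen):
    """Best achievable D(x), given the real and generated densities at x."""
    return p_data / (p_data + p_gen)


def normal_pdf(x, mu, sigma):
    """Density of a 1-D Gaussian, a toy stand-in for an image distribution."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


if __name__ == "__main__":
    # Generator far from the data: the discriminator separates them easily.
    d = optimal_discriminator(normal_pdf(0.0, 0.0, 1.0), normal_pdf(0.0, 3.0, 1.0))
    print(f"D*(0) with mismatched distributions: {d:.3f}")

    # Generator matches the data: the discriminator can only guess.
    d = optimal_discriminator(normal_pdf(0.0, 0.0, 1.0), normal_pdf(0.0, 0.0, 1.0))
    print(f"D*(0) with matching distributions: {d:.3f}")
    print(f"V at equilibrium: {gan_value([d], [d]):.4f} (-log 4 = {-math.log(4):.4f})")
```

The first printed value is close to 1, because a mismatched generator is easy to spot. The matching case prints 0.5, which corresponds to the point at which the discriminator "could not tell the difference".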

Remarkably, all of the portraits have blurred faces and seemingly blank backgrounds, lending a contemporary feel to what is otherwise a Renaissance-style painting, and this is no accident.

"It is an attribute of the model that there is distortion," said Caselles-Dupré. "The Discriminator is looking for the features of the image - a face, shoulders - and for now it is more easily fooled than a human eye."

Portrait of La Comtesse de Belamy - courtesy of Obvious

"We did some work with nudes and landscapes, and we also tried feeding the algorithm sets of works by famous painters. But we found that portraits provided the best way to illustrate our point, which is that algorithms are able to emulate creativity."

19/10/2018: Close encounters of the Google Cloud kind

Google has partnered up with NASA's Frontier Development Lab (FDL) to answer one of the universe's fundamental questions: are we alone?

The tech giant's cloud computing arm is working with NASA's applied research program, which has been set up to answer the challenging questions beyond our skies.

Over the summer, one question in particular called for a collaboration with Google, one of Earth's largest providers of cloud computing. The company has been helping the FDL's 2018 Astrobiology mission, which looks to simulate and classify the possible atmospheres of planets outside our solar system in the search for signs of life.

"With the advent of even more capable telescopes such as TESS, scientists will face ever greater challenges processing the sheer magnitude of image and radio data sent back to Earth," said Massimo Mascaro, technical director at Google's applied AI division.

"Thus, it becomes even more important to have tools that can both analyze and interpret the locations of distant planets in all of this data. We're thrilled to be partnering with NASA FDL to answer the fundamental question: are we alone?"

The search for aliens is extremely challenging, given that space is vast and deadly to humans. Add to this the massive economic cost of sending people and equipment into space, plus the limitations of that technology, and the scale of the challenge quickly becomes clear.

However, studying space and its worlds through a telescope presents a problem of its own: data. The many possible permutations of temperature, atmospheric pressure and elements present on a planet's surface can influence which life forms might be able to survive there, and together they amount to a considerable volume of data.

Mapping this out, the Google-sponsored Astrobiology mission used two approaches to researching these distant planets. Team one focused on simulating the environmental and atmospheric properties of planets as they might diverge from those of Earth. This team used Google Compute Engine with Atmos in a Docker container to simulate multiple parameters, such as elemental composition and pressure, for these planetary environments all at the same time.
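Team one's approach of varying several planetary parameters at once is, in essence, a parameter sweep. The sketch below is a generic illustration, not the FDL team's actual pipeline. `simulate_planet` is a hypothetical placeholder for a real atmospheric model run (in practice, something like one containerised job per combination), and the parameter names and values are invented for the example. It shows why the number of runs grows multiplicatively with every parameter added, and how independent runs can be dispatched concurrently.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor

# Hypothetical, illustrative values only; real studies would use finer grids.
PARAMETER_GRID = {
    "surface_pressure_bar": [0.5, 1.0, 2.0],
    "surface_temp_k": [250, 288, 320],
    "o2_fraction": [0.0, 0.1, 0.21],
    "co2_fraction": [0.0004, 0.01, 0.1],
}


def simulate_planet(params):
    """Hypothetical stand-in for one atmospheric simulation run.

    A real pipeline would launch a model (for example in a container) and
    return its output; here we return a toy score so the sweep can run.
    """
    score = params["surface_pressure_bar"] * params["o2_fraction"]
    return params, score


def build_runs(grid):
    """Expand a dict of parameter lists into one dict per combination."""
    names = list(grid)
    return [dict(zip(names, values)) for values in itertools.product(*grid.values())]


def sweep(grid, max_workers=4):
    """Run every combination; runs are independent, so they execute side by side."""
    runs = build_runs(grid)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(simulate_planet, runs))


if __name__ == "__main__":
    results = sweep(PARAMETER_GRID)
    print(f"{len(results)} simulations for {len(PARAMETER_GRID)} parameters")
```

Four parameters with three values each already yield 3^4 = 81 runs, and a fifth would make it 243. Threads keep the sketch simple; CPU-heavy models would more plausibly be spread across separate processes or cloud machines.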

The goal was to discern a generalised biological theory of atmosphere dynamics on planets similar to Earth that are outside our solar system, to assess their potential to host aliens.

Team two built a machine learning algorithm for analysing rocky or terrestrial exoplanets (planets outside the solar system). Using this new tool, the team developed a process dubbed INARA, which sifts through the massive high-resolution imagery datasets provided by telescopes to identify spectral signatures from planets' atmospheres.

Essentially, Google has gone from internet search engine to space search engine, as it helps to accelerate the hunt for life beyond our solar system.

24/09/2018: British Army prepares for future urban battlefields with AI technology

The British Army has trialled an artificial intelligence system that can scan for hidden attackers in urban battlefields.

The technology, developed by the Defence Science and Technology Laboratory (Dstl) and a UK industry partnership called SAPIENT, uses autonomous sensors to scan a hostile and complex area, such as a city, to flag up potential dangers and make tactical decisions.

"This British system can act as autonomous eyes in the urban battlefield," said Defence Minister Stuart Andrew. "This technology can scan streets for enemy movements so troops can be ready for combat with quicker, more reliable information on attackers hiding around the corner.

"Investing millions in advanced technology like this will give us the edge in future battles. It also puts us in a really strong position to benefit from similar projects run by our allies as we all strive for a more secure world."

The technology has been trialled in Canada, where soldiers took part in a Contested Urban Environment experiment (CUE 18), run in a mock-up urban battlefield in Montreal. Some sensors were used on the ground, while others were placed directly on the soldiers.

More than 150 government and industry scientists have been working with in excess of 80 Canadian troops in the city for three weeks, culminating in a complex exercise at an industrial location known as Silo 5, a huge abandoned grain store close to the historic Old Town area.

Canadian Troops test out the technology during a training exercise in Montreal

The British system was featured alongside a range of experimental tech from other nations, such as exoskeleton suits and night-vision and surveillance systems, and is set to be trialled in the UK.

Current in-service technology requires troops to man live feeds from CCTV-type systems, but the MoD said the SAPIENT tech will free troops up and reduce human error in conflict zones.

This AI system is the result of five years of research and development, jointly funded by Dstl and InnovateUK. Dstl's Chief Executive Gary Aitkenhead said: "This is a fantastic example of our world-leading expertise at its best; our scientists working with our partner nations to develop the very best technology for our military personnel now and in the future."

19/09/2018: Waitrose partners with startup's AI platform to trial two-hour delivery

Supermarket giant Waitrose & Partners has begun trialling a two-hour delivery service in London using a startup's AI platform.

On the dot, spun out of delivery firm CitySprint Group, uses machine learning to comb through a decade of data to optimise delivery routes and increase capacity.

Waitrose's new trials, comprising a same-day and a two-hour delivery service, will initially cover SW5, SW6, SW10, WC1, WC2, EC1, CR5 and CR8. Depending on the trial's success, likely judged by how many customers use the service, the supermarket will then consider rolling it out further.

"The trial enables us to meet a significant number of our customers in our heartland territory," Waitrose & Partners' head of business development Richard Ambler told IT Pro.

"We have not set an end date for the trial as we want to gain as much feedback as possible before exploring next steps.

"The trial will enable us to understand demand and ensure we best meet the needs of customers who might use this service in the future."

Customers who fall within the scope of the trial can have more than 1,500 Waitrose products dispatched to them shortly after placing an order, for a £5 charge.

Waitrose will make use of On the dot's fleet of 5,000 couriers, who use cargo bikes and vans to reduce emissions and follow directions from an AI-powered platform that processes ten years of data to boost performance.

On the dot says its system uses intelligent predictive algorithms via machine learning models to optimise routes and responds to demand in real-time. Automatic dispatch can also be set up based on preconfigured parameters.
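On the dot has not published how its routing works, and the machine learning side (predicting demand from years of historical data) is beyond a short example. As a hedged illustration of the route-ordering half of the problem only, the sketch below uses a classic nearest-neighbour heuristic, a deliberately simple swapped-in technique, to order one courier's drops. The depot and drop coordinates are invented, and straight-line distance stands in for real road travel times.

```python
import math


def nearest_neighbour_route(depot, drops):
    """Order delivery drops greedily: always visit the closest unvisited drop.

    depot: (x, y) starting point, e.g. a store.
    drops: dict mapping drop name -> (x, y) coordinates.
    Returns the visiting order and total straight-line distance (no return leg).
    """
    remaining = dict(drops)
    position = depot
    route, total = [], 0.0
    while remaining:
        name = min(remaining, key=lambda n: math.dist(position, remaining[n]))
        total += math.dist(position, remaining[name])
        position = remaining.pop(name)  # move the courier to this drop
        route.append(name)
    return route, total


if __name__ == "__main__":
    # Hypothetical drop coordinates in km relative to a store.
    drops = {"A": (2.0, 0.0), "B": (1.0, 0.0), "C": (5.0, 0.0), "D": (1.0, 3.0)}
    route, km = nearest_neighbour_route((0.0, 0.0), drops)
    print(route, round(km, 2))
```

A greedy ordering like this is fast but not guaranteed to be optimal; a production platform would also need to account for delivery windows, courier capacity and live traffic, which is where forecasting from historical data could come in.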

"Since partnering with On the dot, Wickes and its service Wickes Hourly has undertaken more than 15,000 deliveries," a spokesperson said.

"For customers using Wickes Hourly, of those ordering at a time when the option is available 40% are opting for same day delivery.

"Leveraging its existing retail estate and extending its reach, Wickes Hourly deliveries are completed over distances of less than a mile, or up to 15 miles from any of Wickes' 220 stores around the country."

The system has been adopted by more than 100 customers to date, including ASOS, Currys PC World, and Dixons.

17/09/2018: MIT uses machine learning to fill the gaps between video frames

Researchers at MIT in the US have devised a way to help artificial intelligence systems predict the motion of objects by learning how those objects change between video frames.

For humans, such inferences about how objects may move are largely implicit, but AI struggles with the concept. Shown three frames of a stack of cans toppling over (the first with the cans neatly stacked, the second with a finger at the base and the third with the cans knocked over), humans can quickly infer that the finger caused the cans to topple. Computers cannot readily make the same logical inference.

However, in a paper from the Massachusetts Institute of Technology's Computer Science and Artificial Intelligence Laboratory, researchers have created something called a Temporal Relation Network (TRN) that learns how objects change in a video at different times.

"We built an artificial intelligence system to recognise the transformation of objects, rather than [the] appearance of objects," Bolei Zhou, a lead author on the paper, told MIT News. "The system doesn't go through all the frames; it picks up key frames and, using the temporal relation of frames, recognize [sic] what's going on. That improves the efficiency of the system and makes it run in real time accurately."

The researchers trained a convolutional neural network on three datasets. The first, Something-Something, built by the company TwentyBN, has more than 200,000 videos in 174 action categories, such as poking an object so it falls over, or lifting an object. The second, Jester, contains nearly 150,000 videos of 27 different hand gestures, such as giving a thumbs-up or swiping left. The third, Charades, built by Carnegie Mellon University researchers, has nearly 10,000 videos of 157 categorised activities, such as carrying a bike or playing basketball.

The researchers then set the technology to work on video files, processing ordered frames in groups of two, three and four, spaced some time apart. It then assigned a probability that the object's movement matched a learned activity, such as tearing or folding a piece of paper.
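The multi-scale idea of looking at ordered groups of two, three and four frames can be sketched in a few lines. The example below is a simplified, hypothetical illustration rather than the MIT team's released code. It samples ordered frame groups from a clip and scores each group with a placeholder `relation_score` function (in the real network, this would be a learned neural network applied to per-frame CNN features). It then sums the scores across the group sizes. The single number per frame used as a "feature" here is an assumption made for brevity.

```python
import itertools
import random


def sample_ordered_groups(num_frames, group_size, num_samples, rng):
    """Pick groups of frame indices that preserve temporal order.

    Enumerating every combination is fine for a short clip; a longer video
    would sample indices directly instead.
    """
    all_groups = list(itertools.combinations(range(num_frames), group_size))
    return rng.sample(all_groups, min(num_samples, len(all_groups)))


def relation_score(features):
    """Placeholder for a learned relation function over one ordered group.

    Here: total change between consecutive frames, so a group spanning a
    visible transformation scores higher than near-identical frames.
    """
    return sum(abs(b - a) for a, b in zip(features, features[1:]))


def multiscale_relation(frame_features, scales=(2, 3, 4), samples_per_scale=3, seed=0):
    """Sum relation scores over several group sizes (temporal scales)."""
    rng = random.Random(seed)
    total = 0.0
    for k in scales:
        for group in sample_ordered_groups(len(frame_features), k, samples_per_scale, rng):
            total += relation_score([frame_features[i] for i in group])
    return total


if __name__ == "__main__":
    # Hypothetical 8-frame clips with one toy feature per frame.
    static_clip = [0.0] * 8
    toppling_clip = [0.0, 0.0, 0.0, 0.1, 0.9, 1.0, 1.0, 1.0]
    print(multiscale_relation(static_clip), multiscale_relation(toppling_clip))
```

Because only a handful of groups is sampled per scale, the system never has to relate every frame to every other frame, which echoes Zhou's point about picking up key frames to keep processing efficient.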

With training, the technology achieved 95% accuracy on the Jester dataset. It also outperformed other models at forecasting an activity from limited frames: after processing the first 25% of frames, the module's accuracy was several percentage points higher than that of a baseline model.

The team now plans to make the technology more sophisticated by combining object recognition with activity recognition, and by adding "intuitive physics", meaning an understanding of the real-world properties of objects.

"Because we know a lot of the physics inside these videos, we can train module[s] to learn such physics laws and use those in recognizing new videos," Zhou said. "We also open-source all the code and models. Activity understanding is an exciting area of artificial intelligence right now."

13/09/2018: Trainline turns to Twitter and AI to alert commuters to rail disruption

Trainline is using artificial intelligence to give commuters real-time information on their daily commute.

The company has introduced automated alerts into its Google Assistant voice app, which provide customers with personalised, real-time messages about updates or disruptions to their journey.

Data scientists at Trainline have used natural language processing and machine learning to analyse data from train operators' Twitter accounts. The notification system works firstly by automatically classifying a tweet's importance. It then uses a second layer of contextual scoring, which calculates which stations the disruption is affecting, as well as how this will impact each individual train. This information is used to allow rail users to see which lines, tracks and rail services are disrupted.

Once the content and importance of the message have been determined, the AI can automatically match this to individual journeys. Trainline said these alerts, which are currently in beta, would inform of disruption "before this data is available through the national rail data feeds".
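Trainline has not published its models, so the following is only a hedged sketch of the layered structure described above, with simple keyword rules swapped in for the trained NLP and machine learning classifiers. The keyword weights, station list and journey format are all invented for illustration. The first stage scores how important a tweet is, the second extracts the stations it mentions, and a final step matches the result against customers' journeys.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword weights standing in for a trained importance classifier.
SEVERITY_TERMS = {"cancelled": 3, "suspended": 3, "blocked": 3,
                  "delays": 2, "disruption": 2, "late": 1}
# Hypothetical station list; a real system would use the full network.
KNOWN_STATIONS = ["London Paddington", "Reading", "Oxford", "Swindon", "Bristol Temple Meads"]


@dataclass
class Journey:
    customer: str
    stations: list  # stations the booked train calls at, in order


def importance(tweet):
    """Stage 1: rough importance score for a train operator's tweet."""
    words = re.findall(r"[a-z]+", tweet.lower())
    return sum(SEVERITY_TERMS.get(w, 0) for w in words)


def affected_stations(tweet):
    """Stage 2: which known stations does the tweet mention?"""
    text = tweet.lower()
    return {s for s in KNOWN_STATIONS if s.lower() in text}


def alerts_for(tweet, journeys, threshold=2):
    """Match an important tweet to journeys calling at an affected station."""
    if importance(tweet) < threshold:
        return []
    stations = affected_stations(tweet)
    return [j.customer for j in journeys if stations.intersection(j.stations)]


if __name__ == "__main__":
    journeys = [
        Journey("alice", ["Oxford", "Reading", "London Paddington"]),
        Journey("bob", ["Bristol Temple Meads", "Swindon"]),
    ]
    tweet = "Delays between Reading and London Paddington due to a signalling fault"
    print(alerts_for(tweet, journeys))
```

Plain substring matching is the weak point here (the word "reading" in ordinary prose would count as a station), which is one reason a production system would lean on trained language models and per-train context rather than fixed rules.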

In addition to the real-time alert, customers will also be able to see the history of the disruption, so they can see its scale, when it started, how it has unfolded and what is being done to fix it.

According to Dave Slocombe, senior director of Product at Trainline, by creating an AI that can read information being shared on train operators' Twitter feeds, "we were able to overcome a key challenge in collecting data quickly from a variety of different sources".

"It's another example of how Trainline is harnessing the power of AI, big data and voice tech to make travel a smoother experience for everyone," he added.

Trainline's disruption notifications are available now in the Trainline voice app via the Google Assistant, by asking it to 'Talk to Trainline'. There is no word on whether the app is heading to Amazon's Alexa.

21/08/2018: AI technologies are among 2018's biggest tech trends

Artificial intelligence technologies such as deep neural nets and virtual assistants are among this year's emerging technology trends, according to analyst firm Gartner.

In its annual hype cycle, which lists the emerging technology trends of 2018, 'democratised' AI - that which is available to the masses - is currently at the "peak of inflated expectations".

"Technologies representing democratised AI populate three out of five sections on the Hype Cycle, and some of them, such as deep neural nets and virtual assistants, will reach mainstream adoption in the next two to five years," said Mike Walker, research vice president at Gartner.

"Other emerging technologies of that category, such as smart robots or AI Platform-as-a-Service (PaaS), are also moving rapidly through the Hype Cycle approaching the peak and will soon have crossed it."

The Hype Cycle tracks how expectations of each technology change over time: from the innovation trigger, through the peak of inflated expectations and the trough of disillusionment, to what the graph calls the "plateau of productivity". No technologies were listed in the final stage, but each one has been given an estimated time frame to reach it. For example, flying autonomous vehicles, which are still at the innovation stage, are not expected to reach the plateau for more than 10 years.

However, AI technologies will be virtually everywhere over the next 10 years, as they become more available to the masses, according to Gartner. It says that movements such as cloud computing and open source will eventually put AI in the hands of everyone.

Many of the technologies that are listed as being at peak expectation are estimated to reach productivity in five to ten years, such as autonomous mobile robots and smart workspaces, which disrupt businesses and the way they operate, according to Gartner.

"Business and technology leaders will continue to face rapidly accelerating technology innovation that will profoundly impact the way they engage with their workforce, collaborate with their partners, and create products and services for their customers," Walker added.

"CIOs and technology leaders should always be scanning the market along with assessing and piloting emerging technologies to identify new business opportunities with high impact potential and strategic relevance for their business."

ITPro is a global business technology website providing the latest news, analysis, and business insight for IT decision-makers. Whether it's cyber security, cloud computing, IT infrastructure, or business strategy, we aim to equip leaders with the data they need to make informed IT investments.
