Inform and enhance your business with open data

There’s a vast, growing body of online information that could transform your business – for free

Data is your organisation’s most valuable resource – and that includes data you don’t own. That might sound nonsensical, but all the information generated or acquired by your company represents only a tiny fraction of what’s available to you. There’s a vast wealth of open data out there, waiting to be exploited and turned into actionable insights.

What is open data?

Broadly speaking, open data is any information resource that’s freely accessible and legally available for third-party use. In practice, the term normally refers specifically to online datasets that anyone can download and process.

“Legally available” doesn’t necessarily mean you can treat the data as your own. There might be restrictions on what you can do with the information you download, such as a requirement to attach a credit to any derived work. Perhaps the best-known open licences are the six Creative Commons licences, but these are far from the only ones – the data owner is free to attach any sort of stipulation they like to the resource they’re offering, so it’s important to take note of the terms and understand your rights and obligations.

One institutional licence you should be aware of is the Open Government Licence. This relates to public sector information, and covers works published by the government and associated bodies such as the National Archives (which originated the licence in the first place). This licence allows for broad reuse of the relevant data, including copying, publishing and adaptation, both commercially and non-commercially – but requires users to credit the data source.

The Open Database License is also important. This is published by Open Data Commons and covers entities such as OpenStreetMap, which was its originator. This gives you an enormous amount of freedom to do what you want with the content: in the licence’s own words, you’re granted a “worldwide, royalty-free, non-exclusive, perpetual, irrevocable copyright licence to do any act that is restricted by copyright over anything within the contents, whether in the original medium or any other. These rights explicitly include commercial use, and do not exclude any field of endeavour. These rights include, without limitation, the right to sublicence the work.”

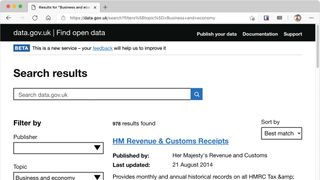

Where can I get open data?

In many territories (including the UK) the government is often the most generous and flexible source of open data – which makes sense, as it carries out a huge amount of publicly funded research. The government’s open data portal hosts an index of 40,000+ data sources, which the Open Data Barometer has rated the best in the world for accountability, innovation and social impact.

Nation states are far from the only providers, however – and neither are they necessarily the easiest to work with. That’s partly because the data was originally collected through multiple studies across multiple departments, so there’s little in the way of standardisation. You’ll find datasets in a wide range of formats, including XML, PDF, spreadsheets and word-processor files.

Private organisations are often more focused, because providing data for external use is typically more central to their mission. It’s in their interest to make sure that the information they offer conforms to a limited range of standards – and that, wherever possible, it’s accessible through an API for automated processing.

Using open data

In 2012, professional services giant Deloitte declared that data was “the new capital of the global economy”. Its vision was that “open data, not simply Big Data, will be a vital driver for growth, ingenuity and innovation in the UK economy” – and that prediction has come to pass.

The range of open data that’s now available is so broad that, in many cases, it’s possible to build core business functions on top of assets you don’t own. For example, if your building company needs a list of local planning authorities, you’ll find them in the Office for National Statistics’ Open Geography Portal. The NHS publishes health-related statistics that could inform a wide range of industries, and if you’re developing an app or website to take on TheyWorkForYou then you can get all the information you need from Parliament’s own data portal.

Cleaning and normalisation

If you’re working with a single open data source – particularly one that’s accessible using a documented API – then the business of ingesting the data and formatting it as required should be fairly simple. Once you start using data from multiple sources, though, complications quickly arise. There’s every possibility that overlapping datasets will contain either duplicate or conflicting data, and not much chance that they will be organised in the exact same way.

The first task for any business using open data is, therefore, to clean and deduplicate the incoming streams before storing them. This is where extract, transform, load (ETL) tools, whether commercial or developed in-house using a language such as Python or R, are invaluable. As the name implies, these tools extract the data from the original source, convert, format and sanitise values as needed, and finally load the output into your own data store.
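To make the three ETL stages concrete, here is a minimal sketch in Python using only the standard library. The two sample feeds, their column names and the `authorities` table are invented for illustration; a real pipeline would read from the publisher's files or API and would need far more thorough validation.

```python
import csv
import io
import sqlite3

# Hypothetical sample feeds: two overlapping sources with different layouts.
SOURCE_A = """name,postcode
Alpha Borough Council,AA1 1AA
 beta district council ,BB2 2BB
"""
SOURCE_B = """authority;post_code
ALPHA BOROUGH COUNCIL;aa1 1aa
Gamma City Council;CC3 3CC
"""


def extract(text, delimiter):
    """Extract: parse delimited text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))


def transform(row, name_key, postcode_key):
    """Transform: collapse whitespace and normalise case so duplicates match."""
    name = " ".join(row[name_key].split()).title()
    postcode = " ".join(row[postcode_key].split()).upper()
    return name, postcode


def load(conn, records):
    """Load: insert records, letting the primary key discard duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS authorities ("
        "name TEXT, postcode TEXT, PRIMARY KEY (name, postcode))"
    )
    conn.executemany("INSERT OR IGNORE INTO authorities VALUES (?, ?)", records)
    conn.commit()


def run_pipeline():
    """Run both feeds through the same store and return the merged rows."""
    conn = sqlite3.connect(":memory:")
    records = [transform(r, "name", "postcode") for r in extract(SOURCE_A, ",")]
    records += [
        transform(r, "authority", "post_code") for r in extract(SOURCE_B, ";")
    ]
    load(conn, records)
    query = "SELECT name, postcode FROM authorities ORDER BY name"
    return conn.execute(query).fetchall()


rows = run_pipeline()
```

The four input rows collapse to three stored records, because the two spellings of the first authority normalise to the same key. Exact-match deduplication like this is the simplest case; conflicting values for the same entity would need an explicit rule about which source to trust.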

Where the data can’t be easily normalised – maybe because it’s locked inside documents such as PDFs and spreadsheets – another approach is to tag the information with metadata to make it easier to locate and make sense of at a later date.
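As a rough illustration of metadata tagging, the sketch below keeps a small catalogue of documents alongside their source, licence and descriptive tags, then looks files up by tag. The `CatalogueEntry` class, the `find_by_tags` helper and every file name are hypothetical, chosen only to show the idea.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    """One hard-to-normalise file plus the metadata that describes it."""
    path: str
    source: str
    licence: str
    tags: set = field(default_factory=set)


def find_by_tags(catalogue, *wanted):
    """Return entries that carry every requested tag, ignoring case."""
    wanted_set = {tag.lower() for tag in wanted}
    return [
        entry for entry in catalogue
        if wanted_set <= {tag.lower() for tag in entry.tags}
    ]


# Hypothetical files and tags, invented for this example.
catalogue = [
    CatalogueEntry("docs/housing-survey.pdf", "Example council", "OGL",
                   {"housing", "planning"}),
    CatalogueEntry("docs/bus-timetable.xlsx", "Example operator", "ODbL",
                   {"transport"}),
    CatalogueEntry("docs/planning-appeals.docx", "Example council", "OGL",
                   {"Planning", "legal"}),
]

matches = [entry.path for entry in find_by_tags(catalogue, "planning")]
```

Recording the licence next to each file also keeps the attribution and sharing obligations discussed earlier attached to the data they apply to.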

Deriving business insights from open data

Getting the data is only half of the battle. For sure, there’s value in collating public data and republishing it in a more user-friendly way, but to make the most of open data, you need to mine it for insights. This may call for the involvement of AI or other “Big Data” techniques: datasets quickly grow too large for manual pattern-spotting, and if you’re working with live information that’s being continually updated, it’s likely to grow too quickly for human analysts to keep up with.

Sad to say, it’s not possible to entirely automate the process of turning gigabytes of numbers into useful information. Machine-learning systems need to be taught what good and bad look like, usually through training and ongoing reinforcement, or by being shown what constitutes a success. Over time, iterative machine learning can then guide the system progressively closer to the goal.

While we’re focusing on open data, it’s worth remembering that these ideas apply equally to your company’s self-generated data. External datasets that complement, inform or contrast with your own records are known as “alternative data”; they can be used to add context to in-house data, and give the algorithms a more diverse set of resources to learn from.

Alternative data has all sorts of uses. You’ve probably heard of British power-generating companies using TV schedules to predict spikes in demand – caused by the nation switching on kettles en masse at the end of a popular programme. Water and sewerage firms similarly use weather reports, inventories and their own network maps to predict when elements within their infrastructure will come under strain. This allows them to anticipate possible failures before they occur, and to have maintenance staff and spare parts on standby in advance.

Indeed, the exciting thing about alternative data is the potential it offers for lateral thinking. For example, TransportAPI provides live train and bus journey information, route maps and performance indicators. It’s currently used by 10,000 developers and organisations, and powers more than a quarter of all UK bus and rail operators’ apps – but the data could equally be used by property developers to plan new sites, advertising agencies to map out campaigns along train or bus routes, and taxi firms to send cabs to locations where the data shows service disruption, in the knowledge that there will be a rich seam of commuters hoping to get home.

Reading the room

Don’t think of open data purely in terms of facts and figures. Social media feeds can also be a powerful free source of real-time information. Using natural-language processing tools to analyse posts and trends allows not only for user targeting but, on a broader scale, also for gauging the popularity of a company’s actions, whether actual or potential. A hedge fund looking to sink several million pounds into a brand might use social data to find out what people think about it, and how those feelings have changed over time, in the hope of projecting any change in measured sentiment into the future.
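The idea of tracking sentiment and projecting it forward can be sketched in a few lines of Python. This is a deliberately crude stand-in: it swaps real natural-language processing for a tiny hand-written word list, and the sample posts, word lists and function names are all invented for illustration. A production system would use a trained language model and a far more careful forecasting method.

```python
from collections import defaultdict
from statistics import mean

# Toy lexicon (an assumption for this sketch, not a real sentiment model).
POSITIVE = {"love", "great", "good"}
NEGATIVE = {"hate", "awful", "bad"}


def score(post):
    """Count positive words minus negative words in a single post."""
    words = [w.strip(".,!?").lower() for w in post.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def weekly_sentiment(posts):
    """posts: iterable of (week_number, text). Returns {week: mean score}."""
    by_week = defaultdict(list)
    for week, text in posts:
        by_week[week].append(score(text))
    return {week: mean(scores) for week, scores in sorted(by_week.items())}


def project(series, future_week):
    """Fit a least-squares line through (week, score) and extrapolate.

    Needs at least two distinct weeks, otherwise the slope is undefined.
    """
    xs, ys = list(series), list(series.values())
    x_bar, y_bar = mean(xs), mean(ys)
    denom = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / denom
    return y_bar + slope * (future_week - x_bar)


# Hypothetical posts about a brand, grouped by week number.
posts = [
    (1, "Awful service, bad app"),
    (1, "I hate the new logo"),
    (2, "Bad update but good support"),
    (3, "Great product, love it"),
]
trend = weekly_sentiment(posts)
forecast = project(trend, 4)
```

Here the weekly averages rise from negative to positive, so the fitted line projects a still higher score for week 4. A straight-line extrapolation is only a first approximation, since public sentiment rarely moves that predictably.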

Although this sort of analysis is more complicated than crunching numbers in Excel, the results can be of huge strategic value. As text-analysis specialist MonkeyLearn explains, “text analysis goes beyond statistics and numerical values, into the qualitative results. Text analysis doesn’t just answer what is happening, but helps you find out why it’s happening.”

In short, text analysis is less like drawing a graph and more like carrying out public opinion polling. However, because it has such a huge corpus of data to draw on, it can reach far further than would be practical with any real-world poll.

Give and take

Many of the licences under which open data is made available specifically require that derivative works be themselves made freely available for others to explore and build on. That may discourage firms that want to use external data for business-sensitive purposes.

Don’t shy away from sharing, though. The Open Data Institute argues that sharing data can contribute to companies’ bottom line by “improving market reach; supporting benchmarking and insights; driving open innovation; driving supply chain optimisation; embracing regulated data sharing; addressing sector challenges; and building trust”.

Sharing data can also just be your contribution to a better world. Online job search engine Adzuna draws on a range of open data to provide a central employment portal so that jobseekers don’t need to waste their time searching multiple agencies. It also makes its own collected data available to third parties through its API, allowing them direct access to job ads, employment data and more. During the pandemic, Adzuna’s data helped the Office for National Statistics track the effect of Covid-19 on the economy.

Still not convinced? In its report “Sharing data to create value in the private sector”, the Open Data Institute highlights a number of options that may help to overcome companies’ reluctance to open up their data to the wider market. One possibility is the use of data trusts, which become guardians of a dataset and make decisions over how it can be used.

Ultimately, sharing data – as long as it’s not commercially sensitive and doesn’t compromise anyone’s privacy – has real potential to help businesses find new opportunities, either by partnering with the second-tier users of their data, or by recognising further potential to develop new products of their own. And you can feel good about it too.

Nik Rawlinson is a journalist with over 20 years of experience writing for and editing some of the UK’s biggest technology magazines. He spent seven years as editor of MacUser magazine and has written for titles as diverse as Good Housekeeping, Men's Fitness, and PC Pro.

Over the years Nik has written numerous reviews and guides for ITPro, particularly on Linux distros, Windows, and other operating systems. His expertise also includes best practices for cloud apps, communications systems, and migrating between software and services.