What is natural language processing?

Creating systems capable of meaningful conversation is one of the hardest tasks facing AI researchers

From hours-long phone queues and countless irrelevant options to some rather unhelpful advice, contacting a customer support helpline is far from anyone’s favourite pastime. However, if you recently booked an NHS appointment at your local surgery, enquired about a cancelled flight, or contacted IT support, you might have had the pleasure of experiencing a more client-friendly approach.

Customer support helplines have been significantly revamped over the last decade or so, with businesses slowly opting to use increasingly interactive automated systems. This is due to the more widespread use of machine learning technology, examples of which include voice assistants such as Google Nest and Amazon Echo’s Alexa, or even your iPhone’s Siri.

Although it might seem strange at first, automating customer service is a natural progression of implementing artificial intelligence (AI) in our daily lives. In fact, it brings a bevy of benefits, from reducing the workload of staff members to contributing to a safer working environment by limiting the amount of verbal abuse staff receive from frustrated customers.

These systems have also become increasingly easy for clients to use. Traditional pre-recorded requests to select one of several easily forgotten numbers are slowly being replaced by systems capable of understanding which department you wish to speak with based on the words you use in the conversation. Some businesses are even opting to use chatbots, which can be integrated with customers’ account information, saving the time of having to dictate all the details mid-conversation.

None of this technology would be possible without natural language processing (NLP), the field of AI that underpins these advancements.

Natural language processing: It's a complex problem

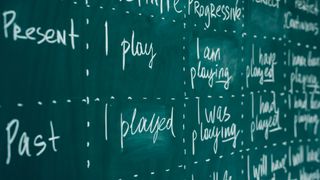

Having an effective conversation or reading a text is rife with nuances, inferences and judgements. It's one thing to break a language up into nouns, verbs, adjectives and the rest, but language is about far more than the various mechanisms that structure a sentence - and it's this added complexity that makes it difficult for computers to interpret and mimic human interaction.

The same word can have different meanings depending on the context in which it is used. Consider 'the aeroplane banked to the left' and 'I banked the cheque you gave me'. How about 'I stood at the bow of the ship' compared to 'Mark tied his shoelaces into a bow' - not to mention the act of bowing to an audience or the device used to shoot an arrow.

Knowing how 'bow' is used in each of those sentences depends on the context provided by the surrounding words. Yet sometimes the context of the surrounding words doesn't help either. For example, consider "I saw the hiker on the path with binoculars". Who has the binoculars - me or the hiker?

The English language is heavily reliant on context, something that non-native speakers have difficulty with, never mind a machine. And that's before we start factoring in highly personal traits that characterise the person speaking, such as irony, sarcasm and humour, which even we humans can find difficult to infer from sentences alone.

Natural language processing: What is it capable of?

Natural language processing helps to overcome many of the problems associated with understanding human language by combining data and algorithms to create context. There are two main aspects to how this works - syntax and semantics.

Syntax is by far the easier of the two to apply, given that it deals with clearly defined rules. This includes dictionary definitions, the structure of sentences and, most notably, parsing, which works out the grammatical structure of a sentence and how its words relate to one another. With the 'binoculars' example above, a parser can set out the possible readings, and earlier or later sentences can then help to identify who the binoculars belong to.

With natural language processing, algorithms are able to apply a number of different syntactic processing techniques to break text down according to these rules. These include: 'word segmentation', which dissects large chunks of text into distinct sections; 'stemming', which involves reducing an inflected word to its root form; and 'morphological segmentation', which involves breaking a word down into its smallest meaningful units, such as 'in', 'come', '-ing' for 'incoming'.
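To make those three techniques a little more concrete, here is a minimal Python sketch of each. It is an illustration under loud assumptions rather than how any production system works: the suffix lists, the prefix list and the tiny `KNOWN_ROOTS` lexicon are all invented for this example, and real stemmers and morphological analysers use far richer rules.

```python
import re

# Hypothetical toy resources, invented for illustration only.
STEM_SUFFIXES = ("ing", "ed", "s")
PREFIXES = ("in", "un", "re")
KNOWN_ROOTS = {"come", "bank", "walk"}


def segment_words(text):
    """Word segmentation: split raw text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def stem(word):
    """Stemming: strip the first matching suffix, keeping at least three letters."""
    for suffix in STEM_SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def segment_morphemes(word):
    """Morphological segmentation: split a word into prefix, root and suffix."""
    parts = []
    for prefix in PREFIXES:
        if word.startswith(prefix) and len(word) > len(prefix) + 2:
            parts.append(prefix)
            word = word[len(prefix):]
            break
    tail = None
    for suffix in STEM_SUFFIXES:
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            tail = "-" + suffix
            word = word[: -len(suffix)]
            break
    # English often drops a final 'e' before a suffix ('come' + '-ing');
    # restore it when the toy lexicon knows the longer form.
    if word not in KNOWN_ROOTS and word + "e" in KNOWN_ROOTS:
        word += "e"
    parts.append(word)
    if tail:
        parts.append(tail)
    return parts


print(segment_words("The aeroplane banked to the left."))
print(stem("banked"))
print(segment_morphemes("incoming"))
```

Even this toy version hints at why the rules are only "clearly defined" up to a point: the stemmer knows nothing about spelling changes, and the morpheme splitter only recovers 'come' because the word was placed in its lexicon by hand.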

Semantics, however, is far less structured and usually the more difficult to interpret. This aspect involves understanding the meaning of individual words within the broader context of the sentence. In the examples above, this means working out which of the various uses of the word 'bow' is the most appropriate.
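One simple way to picture this is to score each possible sense of 'bow' by how many of its typical companion words appear in the sentence, an idea loosely in the spirit of overlap-based disambiguation methods such as the Lesk algorithm. The sketch below is only an illustration: the sense labels and cue words in `SENSES` are invented here and do not come from any real dictionary.

```python
# Hypothetical mini sense inventory for 'bow'; the cue words are invented
# for illustration and are not drawn from any real dictionary.
SENSES = {
    "front of a ship": {"ship", "boat", "deck", "stern", "sail"},
    "decorative knot": {"tied", "shoelaces", "ribbon", "knot", "hair"},
    "bend at the waist": {"audience", "stage", "applause", "curtain"},
    "weapon": {"arrow", "archer", "shoot", "quiver", "target"},
}


def disambiguate(sentence, senses=SENSES):
    """Return the sense whose cue words overlap most with the sentence."""
    cleaned = sentence.lower().replace(".", "").replace(",", "")
    words = set(cleaned.split())
    scores = {sense: len(words & cues) for sense, cues in senses.items()}
    best = max(scores, key=scores.get)
    # A score of zero means the context offered no evidence either way.
    return best if scores[best] > 0 else None


print(disambiguate("I stood at the bow of the ship"))
print(disambiguate("Mark tied his shoelaces into a bow"))
print(disambiguate("She picked up the bow"))
```

The last call returns `None`, because nothing in the sentence points to one sense over another. That is the same problem a human reader would face without more context.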

Semantic analysis can include 'named entity recognition', which involves figuring out which pieces of text map to proper names, and then determining what type of name each one is, whether that's a person's name or the name of a location. Systems can rely on the convention of capitalising the first letter to easily identify a given name. However, the first letter of a sentence is also capitalised, and many organisation names do not capitalise every word. What's more, capitalisation rules are not uniform across languages - in German, every noun is capitalised, for example.
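The weaknesses of the capitalisation shortcut are easy to demonstrate. The following Python sketch is a deliberately naive heuristic, not a real recognition system: the function name `capitalised_candidates` and both example sentences are invented for illustration. It simply treats any run of capitalised words as a candidate name.

```python
import re


def capitalised_candidates(text):
    """Return runs of capitalised words as naive named-entity candidates."""
    return re.findall(r"[A-Z][a-z]+(?:\s[A-Z][a-z]+)*", text)


english = "Yesterday Mark flew from London to the Bank of England."
german = "Der Hund sah die Katze im Garten."

print(capitalised_candidates(english))
# ['Yesterday Mark', 'London', 'Bank', 'England']

print(capitalised_candidates(german))
# ['Der Hund', 'Katze', 'Garten']
```

In the English sentence, the first word is swept up alongside 'Mark', and 'Bank of England' is split in two because 'of' is lower case. In the German sentence, ordinary nouns meaning dog, cat and garden are all flagged as if they were names.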

This is symptomatic of semantic analysis in general - although there are techniques available, there are a number of hurdles still left to overcome.

Natural language processing: Are we there yet?

The very nature of human language, which has evolved over thousands of years, means computer systems need to be flexible, capable of accommodating approximations, and ultimately, able to make educated guesses.

M&S has already replaced the entirety of its call centre staff with software based on NLP

Systems based on natural language processing are still being developed, and only fairly rudimentary models are widely available for public use. Smart assistants such as Siri, Alexa and Google Home are essentially just question and answer machines and are not capable of making sense of conversation.

Apple, Amazon, Google, IBM and other major technology players are all working on their own NLP systems to address a variety of challenges. These include systems capable of analysing large streams of text in documents, assessing emails to help sift junk mail, and maintaining conversations as part of a customer service tool.
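As an illustration of the junk mail case, one long-standing textbook technique is a naive Bayes classifier, which learns how often each word appears in junk and in legitimate messages and then scores new mail accordingly. Nothing here suggests that any of the companies above use this method. The sketch below is a toy version, and the four training messages and both labels are invented for the example.

```python
import math
from collections import Counter

# Hypothetical training messages, invented for illustration.
TRAINING = [
    ("win a free prize now", "junk"),
    ("free money claim your prize", "junk"),
    ("meeting agenda for monday", "legitimate"),
    ("please review the project report", "legitimate"),
]


def train(examples):
    """Count words per label and messages per label."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Pick the label with the highest log-probability (add-one smoothing)."""
    vocabulary = {word for counts in word_counts.values() for word in counts}
    total_messages = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        # Start from the prior: how common this label is overall.
        score = math.log(label_counts[label] / total_messages)
        denominator = sum(counts.values()) + len(vocabulary)
        for word in text.lower().split():
            # Add-one smoothing stops unseen words zeroing the probability.
            score += math.log((counts[word] + 1) / denominator)
        scores[label] = score
    return max(scores, key=scores.get)


word_counts, label_counts = train(TRAINING)
print(classify("claim your free prize", word_counts, label_counts))
print(classify("project meeting on monday", word_counts, label_counts))
```

With so little data the classifier is only as good as its handful of examples, which is why real filters are trained on very large volumes of mail.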

The sophistication of online chatbots is improving all the time, but they are still only really capable of handling simple question and answer interactions and require the user to provide specific, targeted keywords. Complaints are often escalated to a human employee once a problem becomes more nuanced, which, unfortunately for the customer, doesn't take much doing.
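A keyword-driven chatbot of the kind described here can be sketched in a few lines of Python, which also shows why escalation happens so readily. This is an assumption-laden toy, not any vendor's product: the keywords, canned replies and the `ESCALATE` message are all invented for illustration.

```python
# Hypothetical keywords and canned replies, invented for illustration.
INTENTS = {
    "refund": "You can request a refund from the Orders page.",
    "password": "Use the 'Forgot password' link to reset it.",
    "delivery": "Standard delivery takes three to five working days.",
}
ESCALATE = "Let me pass you to a human colleague."


def reply(message, intents=INTENTS):
    """Answer only when exactly one known keyword appears; otherwise escalate."""
    cleaned = message.lower()
    for mark in "?.,!":
        cleaned = cleaned.replace(mark, "")
    words = set(cleaned.split())
    matches = [keyword for keyword in intents if keyword in words]
    if len(matches) == 1:
        return intents[matches[0]]
    # No keyword, or several at once: the bot cannot tell what is wanted.
    return ESCALATE


print(reply("How do I reset my password?"))
print(reply("My parcel arrived damaged and I was charged twice"))
print(reply("I want a refund because the delivery was late"))
```

Only the first message gets a direct answer. The second contains none of the expected keywords and the third contains two, so both are handed to a human.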

We're a long way off the seamless interaction that the customer service industry envisages.

Dale Walker is the Managing Editor of ITPro, and its sibling sites CloudPro and ChannelPro. Dale has a keen interest in IT regulations, data protection, and cyber security. He spent a number of years reporting for ITPro from numerous domestic and international events, including those held by IBM, Red Hat and Google, and has been a regular reporter for Microsoft's various yearly showcases, including Ignite.