Security experts develop method of generating 'highly evasive' polymorphic malware using ChatGPT

Researchers at CyberArk Labs developed a novel method of generating malware from text that largely goes undetected by signature-based anti-malware products.

Security researchers have demonstrated that ChatGPT can generate polymorphic malware that goes undetected by “most anti-malware products”.

It took experts at CyberArk Labs weeks to create a proof of concept for the highly evasive malware, but they eventually developed a way to execute payloads on a victim’s PC using text prompts.

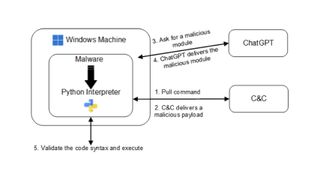

Testing the method on Windows, the researchers said they could create a malware package containing a Python interpreter that can be programmed to periodically query ChatGPT for new modules.

These modules could contain code, delivered as text, that defines the malware’s functionality, such as code injection, file encryption or persistence.

The malware package would then be responsible for checking if the code would function as intended on the target system.

This could be achieved, the researchers noted, through an interaction between the malware and a command and control (C2) server.

In a use case involving a file encryption module, the functionality would come from a ChatGPT query in the form of text, and the malware would then generate a test file for the C2 server to validate.


If successfully validated, the malware would be instructed to execute the code, encrypting the files. If validation wasn’t achieved, the process would repeat until working encryption code could be generated and validated.

The malware would utilise the built-in Python interpreter’s compile functionality to convert the payload code string into a code object which could then be executed on the victim’s machine.

“The concept of creating polymorphic malware using ChatGPT may seem daunting, but in reality, its implementation is relatively straightforward,” the researchers said.

“By utilising ChatGPT's ability to generate various persistence techniques, anti-VM modules and other malicious payloads, the possibilities for malware development are vast.

“While we have not delved into the details of communication with the C2 server, there are several ways that this can be done discreetly without raising suspicion.”

The researchers said this method demonstrates that it is possible to develop malware that executes new or modified code, making it polymorphic in nature.

Bypassing ChatGPT’s content filter


ChatGPT’s web version is known to block certain text prompts because of the malicious code they could produce.

CyberArk’s researchers found a workaround that involved using an unofficial open-source project that allows individuals to interact with ChatGPT’s API, which has not yet been officially released.

The project’s owner said in the project’s documentation that the application uses a reverse-engineered version of the official OpenAI API.

By using this version, the researchers found they could make use of ChatGPT’s technology while bypassing the content filters that would ordinarily block the generation of malicious code in the widely used web app.

“It is interesting to note that when using the API, the ChatGPT system does not seem to utilise its content filter,” the researchers said.

“It is unclear why this is the case, but it makes our task much easier as the web version tends to become bogged down with more complex requests.”

Evading security products

Because the malware receives its payloads as text rather than binaries, CyberArk’s researchers said it doesn’t contain suspicious logic while in memory, meaning it can evade most of the security products they tested it against.

It’s especially effective at evading products that rely on signature-based detection, and it will bypass Antimalware Scan Interface (AMSI) measures because it ultimately runs Python code, they said.

“The malware doesn't contain any malicious code on the disk as it receives the code from ChatGPT, then validates it, and then executes it without leaving a trace in memory,” said Eran Shimony, principal cyber researcher at CyberArk Labs, to IT Pro.

“Polymorphic malware is very difficult for security products to deal with because you can't really sign them,” he added. “Besides, they usually don't leave any trace on the file system as their malicious code is only handled while in memory. Additionally, if one views the executable, it probably looks benign.”

“A very real concern”

The researchers said that a cyber attack using this method of malware delivery “is not just a hypothetical scenario but a very real concern”.

When asked about the day-to-day risk to IT teams around the world, Shimony cited the issues with detection as a chief concern.

“Most anti-malware products are not aware of the malware,” he said. “Further research is needed to make anti-malware solutions better against it.”

Connor Jones has been at the forefront of global cyber security news coverage for the past few years, breaking developments on major stories such as LockBit’s ransomware attack on Royal Mail International, and many others. He has also made sporadic appearances on the ITPro Podcast discussing topics from home desk setups all the way to hacking systems using prosthetic limbs. He has a master’s degree in Magazine Journalism from the University of Sheffield, and has previously written for the likes of Red Bull Esports and UNILAD tech during his career that started in 2015.