Google wants to create an AI musician by teaching it our music history

Researchers hope the software will learn how to create new compositions from existing songs

Google wants to create AI's first musician by teaching it about humankind's history of music.

The Magenta system should be able to generate its own music and art based on just a few notes fed to it by a human being, reports Quartz.

Google Brain researcher Douglas Eck demonstrated the software at the annual Moogfest music and tech festival, explaining that the idea is for Magenta to learn from existing compositions to create its own works of art.

Eck admitted, however, that AI systems are still "far from long narrative arcs". While music is the focus for now, the same approach could in theory be applied to other art forms, such as video.

The tools used for Magenta will be made available to the public, much like TensorFlow, Google's open-source software library for machine learning. One such tool is a program that will let researchers import music files into TensorFlow to train the system.

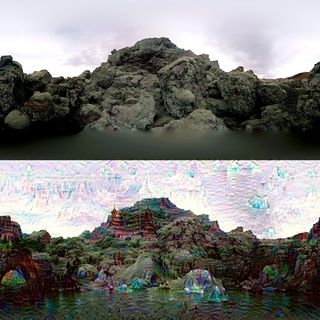

Google's latest project was inspired by its Deep Dream experiments (pictured), which used image recognition software to let users create dreamlike versions of their own images. By over-interpreting images, the software turned otherwise innocuous details into shapes such as animals.

Earlier this month, Google began feeding its AI engine with text from romance novels in order to teach it more about human personality and conversation.


Caroline has been writing about technology for more than a decade, switching between consumer smart home news and reviews and in-depth B2B industry coverage. In addition to her work for IT Pro and Cloud Pro, she has contributed to a number of titles including Expert Reviews, TechRadar, The Week and many more. She is currently the smart home editor across Future Publishing's homes titles.

You can get in touch with Caroline via email at caroline.preece@futurenet.com.