What is machine learning and why is it important?

No longer confined to the world of science fiction, machine learning represents a new frontier in technology.

Machine learning (ML) is the process of teaching a computer system to make predictions based on a set of data. By feeding a system a series of trial-and-error scenarios, machine learning researchers strive to create artificially intelligent systems that can analyse data, answer questions, and make decisions on their own.

Machine learning typically uses algorithms that learn from training data and then apply what they have learned to inference and pattern recognition on new data. This removes the need for the explicit, step-by-step instructions from humans that traditional computer software requires.

What is machine learning?

Machine learning relies on large amounts of data, which are fed into algorithms to produce a model that the system then uses to make predictions. For example, if your data is a list of the fruit you’ve eaten for lunch every day for a year, you could use a prediction algorithm to build a model of which fruits you are likely to eat, and when, in the following year.
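The fruit example above can be sketched with one of the simplest possible "models": count how often each fruit was eaten on each weekday, then predict the most frequent one. This is a minimal illustration under stated assumptions, not a real prediction algorithm; the lunch log, the weekday grouping and the function names `build_model` and `predict` are all hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical lunch log of (weekday, fruit) pairs. A real log would
# cover a whole year; a handful of entries is enough to show the idea.
lunch_log = [
    ("Mon", "apple"), ("Mon", "apple"), ("Mon", "banana"),
    ("Tue", "banana"), ("Tue", "banana"), ("Tue", "orange"),
    ("Wed", "orange"), ("Wed", "apple"), ("Wed", "orange"),
]

def build_model(log):
    """'Train' the model: count how often each fruit was eaten on each weekday."""
    counts = defaultdict(Counter)
    for day, fruit in log:
        counts[day][fruit] += 1
    return counts

def predict(model, day):
    """Return the fruit eaten most often on this weekday, or None if the day was never seen."""
    if day not in model:
        return None
    fruit, _count = model[day].most_common(1)[0]
    return fruit

model = build_model(lunch_log)
print(predict(model, "Mon"))  # apple
print(predict(model, "Tue"))  # banana
```

The model is just the table of counts; more data makes the counts, and therefore the predictions, more reliable.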

The process usually involves trial and error, often with more than one algorithm. Candidate algorithms may be linear models, non-linear models, or even neural networks, and which one works best ultimately depends on the data set you’re working with and the question you’re trying to answer.
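A minimal sketch of that trial-and-error loop, assuming hypothetical data that follows a curve: fit each candidate on training points, score it on held-out points it has never seen, and keep the one with the smallest error. Here a least-squares straight line stands in for a linear model and a 2-nearest-neighbour average stands in for a non-linear one; neural networks are not shown, and all names are illustrative.

```python
# Hypothetical data that follows a curve (y = x squared). Training points are
# used to fit each candidate; held-out points are used only to score them.
train = [(x, x * x) for x in range(-5, 6)]
held_out = [(-4.5, 20.25), (-1.5, 2.25), (2.5, 6.25), (3.5, 12.25)]

def fit_linear(points):
    """Linear model: ordinary least-squares straight line y = a + b*x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return lambda x: a + b * x

def fit_knn(points, k=2):
    """Non-linear model: average the y-values of the k closest training points."""
    def predict(x):
        nearest = sorted(points, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / k
    return predict

def mean_squared_error(model, points):
    return sum((model(x) - y) ** 2 for x, y in points) / len(points)

# Trial and error: fit every candidate, score each on the held-out data,
# and keep whichever makes the smallest errors.
candidates = {"linear": fit_linear, "knn": fit_knn}
scores = {name: mean_squared_error(fit(train), held_out)
          for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores)  # {'linear': 46.0625, 'knn': 0.0625}
print(best)    # knn
```

On this curved data the straight line cannot bend to fit, so the non-linear candidate wins; on data that really is linear, the ranking could easily flip, which is why the choice depends on the data set.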

What are the types of machine learning algorithms?

Machine learning algorithms learn and improve over time using data, rather than following explicit step-by-step instructions written by a human. They are commonly grouped into three types: supervised, unsupervised, and reinforcement learning. Each type has a different purpose and enables data to be used in different ways.

Supervised learning

Supervised learning uses labelled training data, from which an algorithm learns the mapping function that turns input variables into an output variable. There are two types of supervised learning: classification, which is used to predict the outcome of a given sample when the output is a category, and regression, which is used when the output variable is a real value, such as a 'salary' or a 'weight'.
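To make the distinction concrete, here is a minimal sketch using hypothetical labelled data in which each sample (years of experience) carries both a real-valued label (salary) and a category (junior or senior). Ordinary least squares is used for the regression and a single-threshold "decision stump" for the classification; both are chosen purely as simple illustrations, and the data and function names are assumptions.

```python
# Hypothetical labelled training data: the input is years of experience,
# and each sample carries two kinds of label.
years = [1, 2, 3, 4, 5, 6]
salary = [30_000, 35_000, 40_000, 45_000, 50_000, 55_000]             # real value
level = ["junior", "junior", "junior", "senior", "senior", "senior"]  # category

def fit_regression(xs, ys):
    """Regression: learn y = a + b*x by ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx
    a = mean_y - b * mean_x
    return lambda x: a + b * x

def fit_stump(xs, labels, low, high):
    """Classification: learn one threshold (a 'decision stump') that
    misclassifies the fewest training samples."""
    values = sorted(set(xs))
    thresholds = [(p + q) / 2 for p, q in zip(values, values[1:])]

    def errors(t):
        return sum((high if x > t else low) != label for x, label in zip(xs, labels))

    best_t = min(thresholds, key=errors)
    return lambda x: high if x > best_t else low

predict_salary = fit_regression(years, salary)
predict_level = fit_stump(years, level, low="junior", high="senior")
print(predict_salary(7))   # 60000.0
print(predict_level(3.2))  # junior
print(predict_level(7))    # senior
```

In both cases the algorithm only ever sees input/label pairs; what differs is whether the learned mapping outputs a number or a category.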

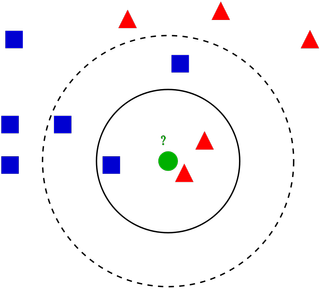

An example of a supervised learning model is the K-Nearest Neighbors (KNN) algorithm, a method of pattern recognition. KNN makes an educated guess about the classification of an object by looking at the labelled objects closest to it on a chart (its 'nearest neighbors') and choosing the category that is most common among them.


In the chart above, the green circle represents an as-yet unclassified object, which can belong to only one of two possible categories: blue squares or red triangles. To identify which category it belongs to, the algorithm analyses which objects are nearest to it on the chart. In this case, the algorithm would reasonably conclude that the green circle belongs to the red triangle category.
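The chart's reasoning can be sketched in a few lines. This is a minimal KNN classifier under stated assumptions: the coordinates below are hypothetical stand-ins for the chart, `k=3` is an arbitrary choice, and `knn_classify` is an illustrative name rather than any library's API. It uses `math.dist`, which needs Python 3.8 or later.

```python
import math
from collections import Counter

# Hypothetical labelled points on a 2D chart: (x, y, category).
labelled = [
    (1.0, 1.0, "blue square"), (1.5, 2.0, "blue square"),
    (2.0, 1.0, "blue square"), (4.0, 2.5, "blue square"),
    (5.0, 5.0, "red triangle"), (5.5, 4.5, "red triangle"),
    (6.0, 5.5, "red triangle"),
]

def knn_classify(point, data, k=3):
    """Assign the category that is most common among the k nearest labelled points."""
    by_distance = sorted(data, key=lambda d: math.dist(point, (d[0], d[1])))
    votes = Counter(category for _, _, category in by_distance[:k])
    return votes.most_common(1)[0][0]

green_circle = (4.5, 4.0)
print(knn_classify(green_circle, labelled))  # red triangle
```

Here the three nearest neighbours are two red triangles and one blue square, so the vote goes to red triangle. With two categories, an odd `k` avoids tied votes.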

Unsupervised learning

Unsupervised learning models are used when there are only input variables and no corresponding output variables. They use unlabelled training data to model the underlying structure of the data.

There are three main types of unsupervised learning algorithms: association, which discovers rules about which items tend to occur together and is used extensively in market-basket analysis; clustering, which groups samples so that those in the same cluster are more similar to each other than to samples in other clusters; and dimensionality reduction, which trims the number of variables in a data set while keeping its important information intact.
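Clustering is perhaps the easiest of these to sketch. Below is a minimal version of the classic k-means (Lloyd's) algorithm on hypothetical, unlabelled customer data; association and dimensionality reduction are not shown. Starting from two hand-picked data points as initial centroids is an assumption made for reproducibility; real implementations usually initialise randomly or with k-means++.

```python
import math

def k_means(points, centroids, max_iterations=100):
    """Lloyd's k-means: alternately assign each point to its nearest centroid
    and move each centroid to the mean of its assigned points."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
            if cluster else old  # an empty cluster keeps its previous centroid
            for cluster, old in zip(clusters, centroids)
        ]
        if new_centroids == centroids:  # centroids stopped moving: converged
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical unlabelled data: (visits per month, average spend) for six customers.
customers = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, clusters = k_means(customers, centroids=[customers[0], customers[3]])
print(clusters)  # [[(1, 1), (1, 2), (2, 1)], [(8, 8), (8, 9), (9, 8)]]
```

No labels are supplied at any point: the two groups emerge purely from how close the points are to one another.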

Reinforcement learning

Reinforcement learning allows an agent to decide its next action based on its current state by learning behaviors that maximize a reward. It's often used in gaming environments, where an algorithm is given the rules and tasked with solving the challenge as efficiently as possible. The agent acts randomly at first, but over time, through trial and error, it learns where and when it needs to move in the game to maximize points.

In this type of training, the reward is a signal, usually a number, that the agent receives when it reaches a state associated with a positive outcome. For example, an algorithm controlling a car might be rewarded for keeping the car on the road without hitting obstacles.
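The learn-by-reward loop described above can be sketched with tabular Q-learning, one well-known reinforcement learning algorithm, chosen here as an illustration. The "game" is a hypothetical five-cell corridor in which the agent earns a reward of 1 only for reaching the right-hand end; the learning rate, discount and exploration values are arbitrary assumptions. The agent starts out moving at random and gradually learns that moving right pays off.

```python
import random

N_STATES = 5                           # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)                     # index 0 = move left, index 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate

def step(state, move):
    """Environment: move along the corridor (walls at both ends).
    The reward is 1 for reaching the goal and 0 otherwise."""
    next_state = min(max(state + move, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] = learned value
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each episode
            # Epsilon-greedy: explore at random some of the time (and whenever
            # the two values tie), otherwise exploit the best-known action.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[action])
            # Q-learning update: nudge the value towards the reward plus the
            # discounted value of the best action from the next state.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q = train()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent to go right; the preference emerges because the reward at the goal propagates back, discounted, through the values of the states that lead to it.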

What is machine learning used for?

Organizations can pull in information from multiple sources. Whether that is from changing customer behaviors or even the actions of their staff members, the volume of data available is almost limitless. However, the sizes of these potential datasets pose a problem when it comes to analyzing and, ultimately, using the information.

This is where ML comes into play: it can pull that data together and find the patterns or information it needs to make predictions. Medical analysis is an easy example. Human eyes would need a very long time to find all the relevant patterns in a thousand MRI scans, whereas a system running ML software can be trained on that data and then pick out the key details in new scans in a matter of seconds, though only if the training data has been labelled correctly.

Everyday uses of machine learning

One of the most famous examples of machine learning is a service most people use every day: Google Search. Web searching with Google harnesses many different ML algorithms, some of which analyze the text that is typed into the search box.

Further algorithms then tailor the search results to the user's previous searches, in the hope of making them more personalized. For example, the term "Java" could simply bring up results for the programming language; however, if the user has a search history full of coffee products, coffee results are more likely to be suggested.
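The "Java" example can be illustrated with a toy re-ranker. This is emphatically not how Google ranks results (its systems are far more complex and not public); it is a minimal sketch, assuming each result carries a hypothetical topic tag, that moves results matching the topics a user has searched for most often to the top.

```python
from collections import Counter

# Hypothetical results for the query "Java", each tagged with a topic.
results = [
    ("Java SE documentation", "programming"),
    ("Learn Java in 10 days", "programming"),
    ("Best Java coffee beans", "coffee"),
]

def personalize(results, history_topics):
    """Re-rank results so that topics the user has searched for more often come first.
    sorted() is stable, so results with equal scores keep their original order."""
    topic_counts = Counter(history_topics)
    return sorted(results, key=lambda result: topic_counts[result[1]], reverse=True)

history = ["coffee", "coffee", "coffee", "programming"]
for title, _topic in personalize(results, history):
    print(title)
```

For a coffee-heavy history, the coffee result moves to the top while the two programming results keep their original relative order; with an empty history, the original ranking is returned unchanged.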

For businesses, ML use cases range from employee monitoring software to endpoint security platforms. Security software is often built with ML that can analyze attack patterns and use the information to detect potential threats early on.
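Security products use far more sophisticated models, but the core idea of "learn what normal looks like, then flag what doesn't fit" can be sketched with a simple statistical baseline. In this minimal example the hourly counts of failed logins are hypothetical, and the three-standard-deviation threshold is an arbitrary assumption rather than an industry standard.

```python
import statistics

# Hypothetical baseline: failed login attempts per hour during normal operation.
baseline = [3, 5, 4, 6, 5, 4, 3, 5, 6, 4]

def fit_detector(history, threshold=3.0):
    """Learn what 'normal' looks like (mean and spread), then flag values
    more than `threshold` standard deviations away from the mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)

    def is_anomalous(value):
        return abs(value - mean) / stdev > threshold

    return is_anomalous

is_anomalous = fit_detector(baseline)
print(is_anomalous(5))   # False
print(is_anomalous(40))  # True
```

A sudden burst of 40 failed logins in an hour sits far outside the learned baseline and is flagged, which is the kind of early warning the paragraph describes.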

Machine learning data bias

Bias in data has been a hot topic of debate for many years, and may become more problematic as we expand the use of ML technology into public-facing systems and services.


Bias is not always easy to spot, and it can exist in the data itself. If an organisation wants to diversify its hiring, for example, but uses only the CVs of its current workers as training data, the ML application may inadvertently favor candidates who resemble existing staff.

Some governments have been spooked by this kind of bias, and it has prompted a number of them to introduce regulations that aim to limit the use of such systems. In the UK, the Cabinet Office's Race Disparity Unit and the Centre for Data Ethics and Innovation (CDEI) teamed up to research potential bias in algorithmic decision-making. The US government also decided to pilot diversity regulations for AI research, intended to minimize the risk of racial or sexual bias in computer systems.

What machine learning can and cannot do

To understand what ML can and can't do, we must first discard most of our pop culture references and largely ignore what film and TV may have suggested. It also helps to keep an open mind: you might be disappointed by what it can only just manage right now, yet amazed by how it is already being used.

What can machine learning be used for?

- Voice recognition

- Speech-to-text transcription

- Recommendations based on search terms

- Image recognition

What can't machine learning be used for?

- Recognising human intentions

- Market analysis

- Recognising cause-and-effect relationships

- Making ethical or moral decisions by itself

Bobby Hellard is ITPro's Reviews Editor and has worked on CloudPro and ChannelPro since 2018. In his time at ITPro, Bobby has covered stories for all the major technology companies, such as Apple, Microsoft, Amazon and Facebook, and regularly attends industry-leading events such as AWS Re:Invent and Google Cloud Next.

Bobby mainly covers hardware reviews, but you will also recognize him as the face of many of our video reviews of laptops and smartphones.