Google's radar-based gesture sensor given the go-ahead

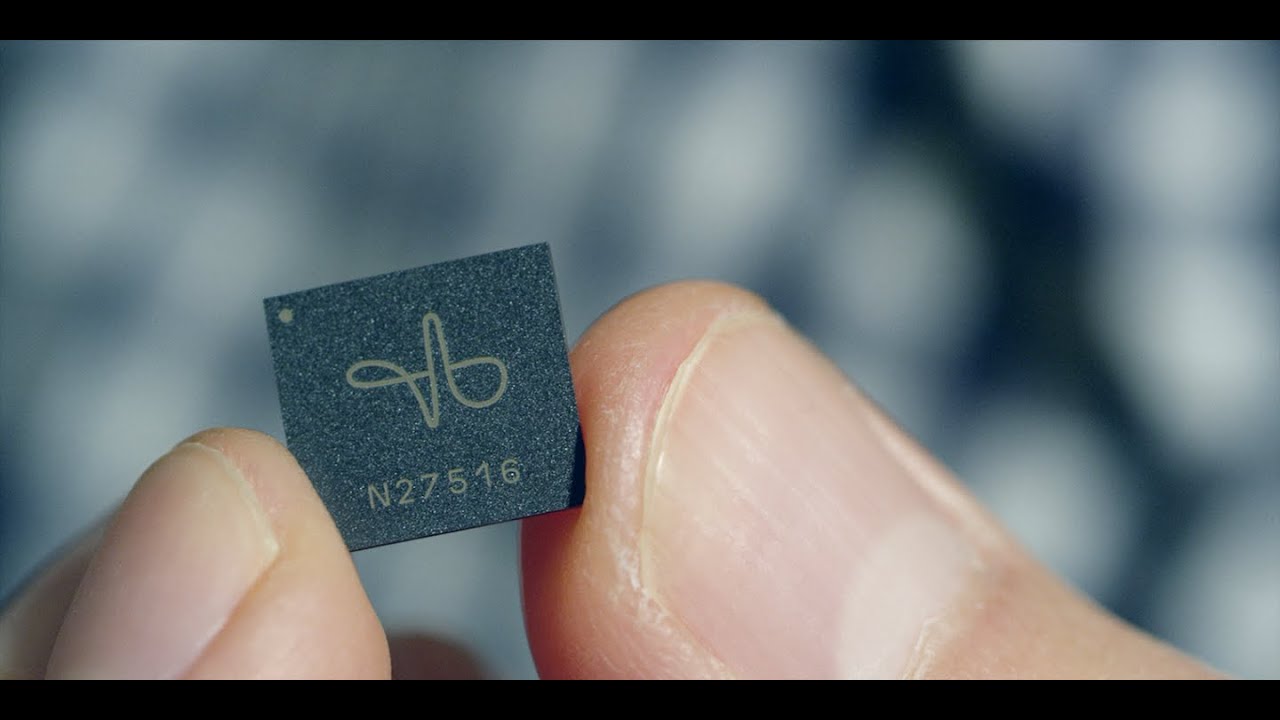

Tiny chips use radar signals to create virtual controls for devices

Google has been given the green light by the FCC to push forward with a radar-based sensor that can recognise hand gestures, with the technology being pegged as a new way to control smartphones and IoT devices.

Project Soli, which is a form of sensor technology that works by emitting electromagnetic waves in a broad beam, was initially blocked due to concerns it would disrupt existing technology.

Radar beam interpreting hand gestures - courtesy of Google

Objects within the beam scatter energy, reflecting some portion back towards a radar antenna. The reflected signals capture information about the objects' characteristics and dynamics, including size, shape, orientation, material, distance and velocity.
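As a rough illustration of how distance and velocity can be recovered from a reflected radar signal, the sketch below applies the standard round-trip-delay and Doppler-shift relations. It is not Google's implementation: the function names are hypothetical, and the 60 GHz carrier is an assumed value chosen only for the example.

```python
# Minimal sketch of textbook radar relations; not Soli's actual processing.
C = 299_792_458.0  # speed of light in metres per second


def range_from_delay(round_trip_s: float) -> float:
    """Distance to a reflector, given the echo's round-trip time in seconds."""
    # The signal travels out and back, so halve the total path length.
    return C * round_trip_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Speed towards (+) or away from (-) the antenna, from the Doppler shift."""
    # For a reflected wave the shift is roughly 2 * v * f_carrier / c.
    return doppler_hz * C / (2.0 * carrier_hz)


if __name__ == "__main__":
    ASSUMED_CARRIER_HZ = 60e9  # assumption for illustration only
    print(f"range: {range_from_delay(2e-9):.3f} m")
    print(f"velocity: {radial_velocity_from_doppler(400.0, ASSUMED_CARRIER_HZ):.3f} m/s")
```

Under these assumptions, an echo arriving two nanoseconds after transmission corresponds to a hand roughly 30cm away, and a 400 Hz Doppler shift to a finger moving at about one metre per second.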

However, it has taken Google a number of years to get Project Soli going, as its radar system was initially unable to pick up user gestures accurately and had trouble isolating each motion. Google attributed these problems to the low power levels the smartwatch had to operate on due to FCC restrictions.

The tech giant applied to the FCC for a waiver to operate at higher power levels. Facebook initially protested, claiming that higher power levels could interfere with existing technology. The two companies have since settled the dispute, and Google has been granted the waiver after the FCC determined that Project Soli could serve the public interest and had little potential for causing harm.

The approval means that Soli can move forward as a new way to interact with technology. Because the chips are so small, they can be fitted into wearables, smartphones and many IoT devices.

Carsten Schwesig, the design lead of Project Soli, said his team wanted to create virtual tools because they recognised that certain control actions, such as a pinch movement, can be read fairly easily.

"Imagine a button between your thumb and index finger. The button's not there, but pressing it is a very clear action and there is a very natural haptic feedback that occurs as you perform that action," he said.

"The hand can both embody a virtual tool and it can also be acting on the virtual tool at the same time. So if we can recognise that action, we have an interesting direction for interacting with technology."

There is currently no indication as to when the company plans to roll out the new technology.

Bobby Hellard is ITPro's Reviews Editor and has worked on CloudPro and ChannelPro since 2018. In his time at ITPro, Bobby has covered stories for all the major technology companies, such as Apple, Microsoft, Amazon and Facebook, and regularly attends industry-leading events such as AWS Re:Invent and Google Cloud Next.

Bobby mainly covers hardware reviews, but you will also recognize him as the face of many of our video reviews of laptops and smartphones.
